Extract Data from Multiple Web Pages into Excel using import.io

In this tutorial, I will show you how to extract (scrape) data from multiple pages of a website or blog and save it to an Excel spreadsheet for further processing. There are various methods and tools for this, but I found them complicated, so I prefer to use import.io. Import.io doesn’t require any programming skills. The platform is powerful and user-friendly, has plenty of support online, and above all is FREE to use.

You can use either the online version of their data extraction software or the desktop application. This tutorial covers the online version.

Let us get started.

Step 1: Find a web page you want to scrape data from

You can extract data such as prices, images, authors’ names, addresses, dates, etc.

In this tutorial, I will use bongo5.com.

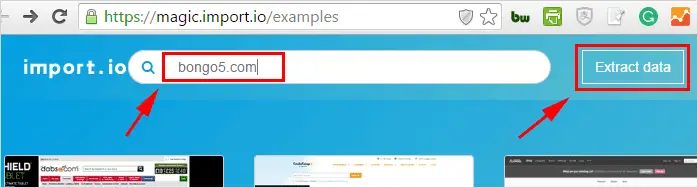

Step 2: Enter the URL for that web page into the text box and click “Extract data”

Textbox link: https://magic.import.io/

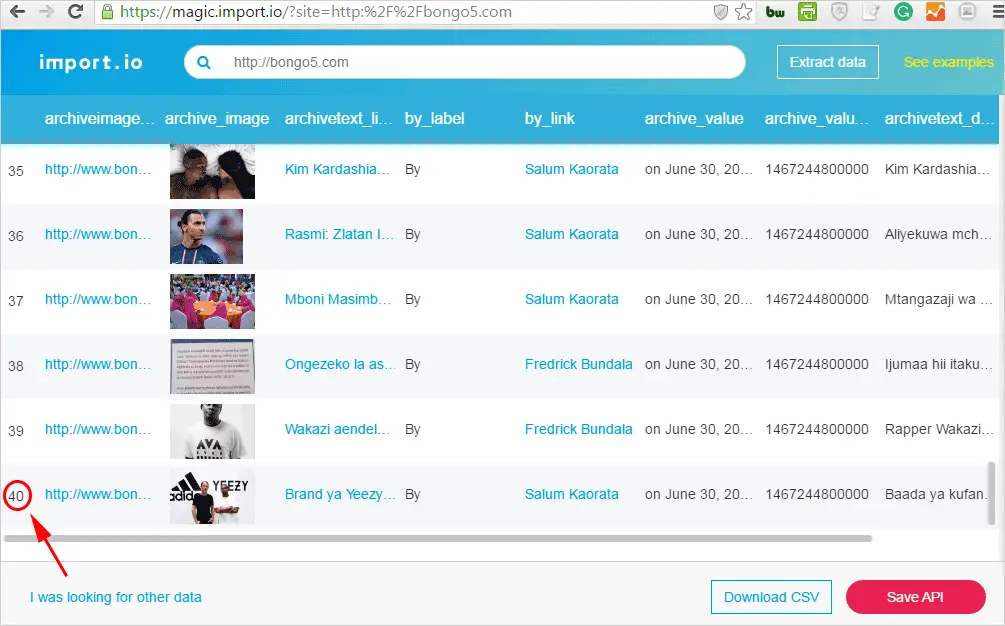

Once you click “Extract data”, import.io will transform the web page into data in seconds. Data such as authors, images, publication dates and post titles will be pulled from the web page, as shown in the image below.

Import.io extracted only the 40 posts or articles on the first page of the blog!

If you visit bongo5.com, you will notice that the site had a total of 600+ pages at the time of writing, and each page has 40 posts or articles on it, as shown in the image below.

The next step shows how to extract data from multiple pages of the site into Excel.
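To get a feel for how much data that is, here is a minimal Python sketch of the arithmetic. It assumes every page is full (40 posts) and uses 600 as a lower bound for the page count; the function name `estimate_posts` is just for illustration.

```python
# Figures taken from the article: 40 posts per page, 600+ pages.
POSTS_PER_PAGE = 40
MIN_PAGES = 600  # lower bound; the real number was "600+" at the time of writing


def estimate_posts(pages, posts_per_page=POSTS_PER_PAGE):
    """Estimate the number of posts on `pages` pages, assuming each page is full."""
    return pages * posts_per_page


print(estimate_posts(1))          # 40, what a single-page extraction returns
print(estimate_posts(MIN_PAGES))  # 24000, a rough lower bound for the whole blog
```

So extracting just the first page covers only a tiny fraction of the blog.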

Step 3: Download Data from Multiple Web Pages into Excel

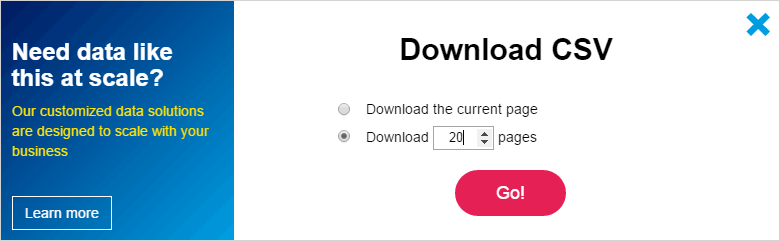

Using the import.io online tool, you can extract data from a maximum of 20 web pages. Go to the bottom right corner of the import.io online tool page and click “Download CSV” to save the extracted data from those 20 pages in a file you can open in Excel.

Note: Using the import.io desktop application, you can extract an unlimited number of pages and pinpoint only the data you want to extract. Check out this tutorial on how to use the desktop application.

Once you click “Download CSV”, the following pop-up window will appear. You can specify the number of pages you want to get data from, up to a maximum of 20, and then click “Go!”

You will need to sign up for a free account to download the data as a CSV or save it as an API. If you save it as an API, you can go back to it later to extract new data when the web page is updated, without repeating the steps we have done so far. You can also use the API for integration into other platforms.

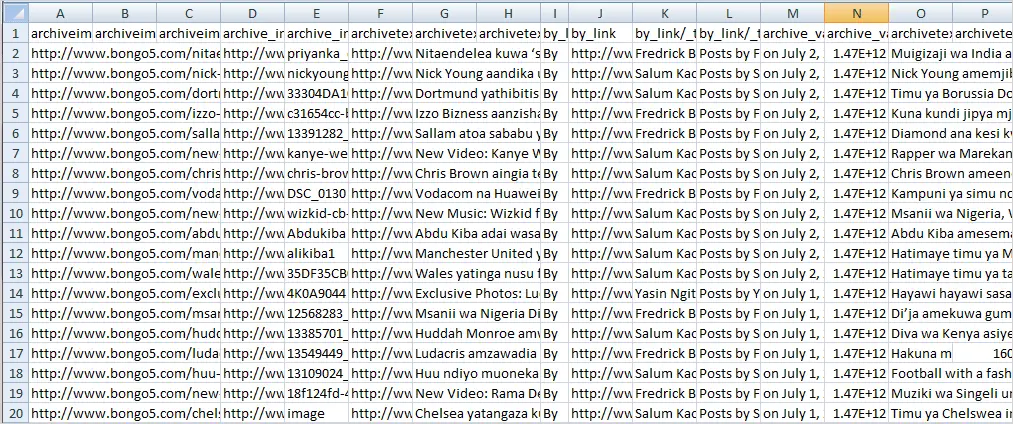

The image below shows 20 of the 800 rows of data extracted from the 20 pages of the site.
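The 800 rows follow from 20 pages with 40 posts each. If you would like to check the download outside Excel, the following is a minimal sketch using Python’s standard `csv` module. It assumes the CSV has a header row; the file name `bongo5.csv` and the helper name `summarize_csv` are hypothetical, so substitute whatever name your download was saved under.

```python
import csv

# 20 pages x 40 posts per page, as described in the article.
EXPECTED_ROWS = 20 * 40


def summarize_csv(path):
    """Return (column_names, row_count) for a CSV file that has a header row."""
    # utf-8-sig also handles files that start with a byte-order mark.
    with open(path, newline="", encoding="utf-8-sig") as f:
        reader = csv.DictReader(f)
        row_count = sum(1 for _ in reader)  # counts data rows, not the header
        return reader.fieldnames, row_count


# Example usage (hypothetical file name):
# columns, rows = summarize_csv("bongo5.csv")
# print("Columns:", columns)
# print("Rows:", rows, "expected about", EXPECTED_ROWS)
```

The column names depend on what import.io detected on the page, so the sketch only reports them rather than assuming any particular ones.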

Conclusion

The online tool offers less flexibility than the desktop application. For example, you cannot extract more than 20 pages, and you cannot pinpoint the type of data you want to extract. For a more advanced tutorial on how to use the desktop application, you can check out this tutorial I created earlier.

You might also be interested in how to extract data from Facebook and Twitter to Excel.